In this project I constructed a Convolutional Neural Network (CNN), using TensorFlow,

for the purpose of categorizing flight anomalies during the final

approach phase of an aircraft, specifically within 160 seconds after

descending below 1000 feet.

I have been fascinated by the realm of flight for quite some time, and

embarking on this project felt like an exciting endeavor. Flight represents

one of the most remarkable and continually evolving achievements, with

notable advancements in aviation technology and aerospace engineering

throughout the 21st century. From cutting-edge aircraft designs to innovations

in propulsion and navigation systems, the ongoing progress in the field of

flight has truly defined the spirit of exploration and human ingenuity in

this era.

I have experience building Machine Learning (ML) models in TensorFlow and other

machine learning libraries like Scikit-learn. In this project I utilized

publicly available flight data, from NASA, to build a CNN ML model using

aircraft data pertaining to the final approach 160 seconds after

descending below 1000 feet.

Researchers utilized de-identified aggregate flight data to proactively

identify and analyze trends in the National Airspace System (NAS),

allowing the aviation community to allocate resources effectively

and enhance overall safety. The shared data, recorded from a specific

regional jet over three years, includes detailed information on aircraft

dynamics and system performance without revealing specific airline or

manufacturer details. NASA has made these records publicly accessible.

The data and other resources can be found here:

-- NASA DASHlink Sample Flight Data Description

-- NASA DASHlink Sample Flight Data

Data Overview:

Code Editor:

# -*- coding: utf-8 -*-

"""

Created on Sat Oct 28 16:28:32 2023

@author: ang

"""

# Imports

import pandas as pd

import numpy as np

from sklearn.model_selection import train_test_split

from imblearn.over_sampling import RandomOverSampler

pd.options.display.max_columns = None

'''

This data contains a 160 second window snapshot of ~99K flights on final

approach when the flights are crossing 1,000 ft before touchdown. There

are 3 different anomaly types and 1 nominal class.

"The posted files contain actual data recorded onboard a single type of

regional jet operating in commercial service over a three-year period.

While the files contain detailed aircraft dynamics, system performance,

and other engineering parameters, they do not provide any information

that can be traced to a particular airline or manufacturer."

Cited;

https://c3.ndc.nasa.gov/dashlink/resources/1018/

https://c3.ndc.nasa.gov/dashlink/projects/85/

'''

# Open the data packed in the .npz; this is a zipped Numpy file saved with Numpy

numpy_data_file = np.load('DASHlink_full_fourclass_raw_comp.npz', allow_pickle=True)

Python Console:

In []: numpy_data_file.files

Out []: ['data', 'label']

The 'label' file is the assigned label for each array of flight data.

This might sound confusing at first but when you see it it starts to make

much more sense. Let us explore the structure and intricacies of the data.

Data Structure and Details

Python Console:

# First we can assign both arrays 'data' and 'label' to their own variables.

In []: np_arr_data = numpy_data_file['data']

In []: np_arr_labels = numpy_data_file['label']

# We can now check the shapes of both arrays.

In []: np_arr_data.shape

Out[]: (99837, 160, 20)

In []: np_arr_labels.shape

Out[]: (99837,)

# This is the first array in the array of arrays at index 0 of np_arr_data.

In []: np_arr_data[0]

Out[]:

array([[81.26119 , 82.652336 , -8.111792 , ..., -1.0818703 ,

0.9723786 , 12.625183 ],

[79.604095 , 81.0157 , -7.6446114 , ..., -0.70481956,

0.7700771 , 11.893839 ],

[81.30211 , 80.7702 , -7.552573 , ..., -0.24044622,

0.54393727, 12.559112 ],

...,

[78.84715 , 81.93631 , 0.14975742, ..., 0.68785334,

1.0827516 , 9.047807 ],

[83.900276 , 84.698135 , 1.3170995 , ..., 0.51355934,

1.0503458 , 8.270673 ],

[82.5705 , 77.12868 , 0.6525055 , ..., 0.29619697,

1.0963339 , 9.127736 ]])

# This is the shape of the array at index 0.

In []: np_arr_data[0].shape

Out[]: (160, 20)

'''

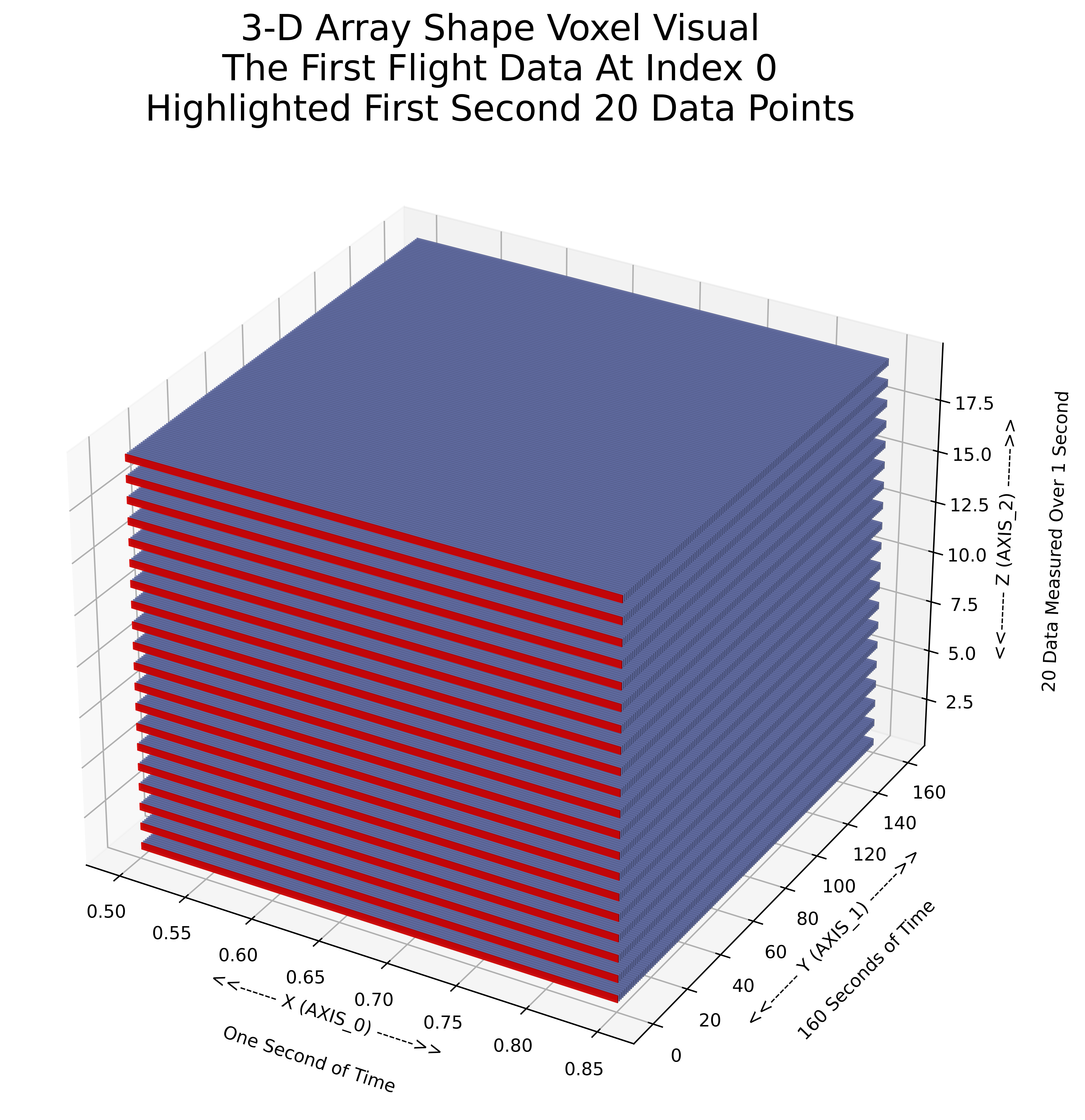

So, why is np_arr_data three dimensions whereas np_arr_labels is one?

This is because the np_array_data consists of 99837 flights, with 160 seconds of

data from each flight, and 20 variables measured per-second per flight.

The np_arr_labels consists of how each of the respective 99837 flights were classified.

According to the PDF documentation 0 is Nominal, 1 is Speed High, 2 is Path High,

and 3 is Flaps Late Setting.

The index of the np_arr_data and np_arr_labels are in tandem. So, we can see how

the first flight at index 0 was classified.

'''

In []: np_arr_labels[0]

Out[]: 0.0

# How was the last flight in classified?

In []: np_arr_labels[-1]

Out[]: 3.0

'''

Above we see the first flight was classified as Nominal (0.0) and the last flight

was classified as Flaps Late Setting (3.0).

Do not mind that the values are floats.

For now it does not matter.

'''

'''

The PDF documentation was well written by the contributor on the NASA DASHlink.

We can clearly understand that there exist 20 variables and their respective index

in the columns. Each variable corresponds to a measured instrument on the aircraft.

To further examine the data to a more human readable format I am going to place

the first array data into a Pandas DataFrame.

'''

# To start we will need all the variable columns into a list of strings.

# These are detailed, by index, in the PDF documentation.

In []: str_var_cols = [

'AILERON POSITION LH',

'AILERON POSITION RH',

'CORRECTED ANGLE OF ATTACK',

'BARO CORRRECT ALTITUDE LSP',

'COMPUTED AIRSPEED LSP',

'SELECTED COURSE',

'DRIFT ANGLE',

'ELEVATOR POSITION LEFT',

'T.E. FLAP POSITION',

'GLIDESLOPE DEVIATION',

'SELECTED HEADING',

'LOCALIZER DEVIATION',

'CORE SPEED AVG',

'TOTAL PRESSURE LSP',

'PITCH ANGLE LSP',

'ROLL ANGLE LSP',

'RUDDER POSITION',

'TRUE HEADING LSP',

'VERTICAL ACCELERATION',

'WIND SPEED'

]

'''

Now we can apply the columns to the first array at index 0 to yield a Pandas DataFrame

and better understand the structure of the data.

'''

In []: df_arr_slice_0 = pd.DataFrame(np_arr_data[0,:,:],columns=str_var_cols)

In []: np_arr_labels_slice_0 = np_arr_labels[0]

In []: df_arr_slice_0 # All the data captured for the first flight at index 0 in np_arr_data to a DataFrame.

Out[]:

AILERON POSITION LH AILERON POSITION RH CORRECTED ANGLE OF ATTACK \

0 81.261190 82.652336 -8.111792

1 79.604095 81.015700 -7.644611

2 81.302110 80.770200 -7.552573

3 82.345470 83.900276 -8.395265

4 81.874930 82.754620 -7.854284

.. ... ... ...

155 79.542725 82.038600 -3.399119

156 80.258750 80.136000 -2.238594

157 78.847150 81.936310 0.149757

158 83.900276 84.698135 1.317100

159 82.570500 77.128680 0.652506

BARO CORRRECT ALTITUDE LSP COMPUTED AIRSPEED LSP SELECTED COURSE \

0 1969.61740 155.571400 -2.109358

1 1955.69950 154.512050 -2.109358

2 1940.02670 153.328670 -2.109358

3 1924.54930 150.888180 -2.109358

4 1905.36700 150.694610 -2.109358

.. ... ... ...

155 369.51834 116.645760 -2.109358

156 357.74258 116.160164 -2.109358

157 346.11517 114.936070 -2.109358

158 335.11508 114.101250 -2.109358

159 321.76358 114.756260 -2.109358

DRIFT ANGLE ELEVATOR POSITION LEFT T.E. FLAP POSITION \

0 -0.692778 -4.952854 3065.0

1 -0.867216 -5.198349 3065.0

2 -1.424093 -4.830105 3065.0

3 -1.141912 -4.625526 3065.0

4 -0.724660 -4.400490 3065.0

.. ... ... ...

155 -1.466813 -2.436520 3701.0

156 -1.488424 -1.229500 3701.0

157 -1.614193 -2.027359 3701.0

158 -1.529666 -2.047817 3701.0

159 -0.819070 -1.004459 3701.0

GLIDESLOPE DEVIATION SELECTED HEADING LOCALIZER DEVIATION \

0 0.01794 -2.109358 0.006664

1 0.01950 -2.109358 0.007448

2 0.01872 -2.109358 0.009604

3 0.01677 -2.109358 0.009408

4 0.01677 -2.109358 0.009408

.. ... ... ...

155 -0.04017 -2.109358 -0.010192

156 -0.08034 -2.109358 -0.006664

157 -0.12519 -2.109358 -0.003724

158 -0.16380 -2.109358 -0.005684

159 -0.14664 -2.109358 0.003136

CORE SPEED AVG TOTAL PRESSURE LSP PITCH ANGLE LSP ROLL ANGLE LSP \

0 70.741180 985.42550 -3.662261 0.785912

1 70.717750 985.52030 -3.665276 0.046774

2 70.702760 985.41650 -3.940319 0.804820

3 70.741590 984.81710 -4.275129 1.077102

4 70.570450 985.23065 -4.241483 1.654806

.. ... ... ... ...

155 79.387634 1024.64040 -1.882211 -0.315151

156 79.338200 1024.79610 -1.309721 -0.224883

157 79.454490 1024.89700 0.100387 0.674243

158 79.324840 1024.83230 0.681758 1.592121

159 79.679760 1025.56470 1.094914 0.962178

RUDDER POSITION TRUE HEADING LSP VERTICAL ACCELERATION WIND SPEED

0 -0.390141 -1.081870 0.972379 12.625183

1 -0.756234 -0.704820 0.770077 11.893839

2 -1.325632 -0.240446 0.543937 12.559112

3 -0.326884 -0.191627 1.062817 10.542998

4 0.129545 -0.528425 0.867628 9.713539

.. ... ... ... ...

155 -1.063202 0.573140 0.987543 9.934987

156 -0.209496 0.299006 0.990345 8.879451

157 -0.894619 0.687853 1.082752 9.047807

158 -1.356709 0.513559 1.050346 8.270673

159 -4.275764 0.296197 1.096334 9.127736

[160 rows x 20 columns]

In []: np_arr_labels_slice_0 # The data captured by the aircraft is labeled Nominal.

Out[]: 0.0

'''

So, now we have a clearer picture of what we are dealing with. Specifically, 20

features measured, over 160 seconds of time, for 99837 flights. Or, potentially

99837 DataFrames—to have that many DataFrames is not practical and is only used

here as an example of one array in np_arr_data.

'''

Test Train Split

Python Console:

'''

The first step we need to take here is very important. We must flatten the data.

This is for two very specific reasons. One, our CNN model is going to accept a flattened

array as input when we build it. Two, the data may be imbalanced and we need an

array with 2 dimensions when we use RandomOverSampler, from Scikit-learn,

to fix the imbalance.

'''

# We can take the array and reshape it from (99837, 160, 20) to (99837, 3200)

In []: np_arr_data = np_arr_data.reshape(99837, 3200)

'''

The reason that it is possible to reshape the array from (99837, 160, 20) to

(99837, 3200) is that we are not changing anything except the shape. The validity

of the data is unaffected. Take for example the following;

'''

# The first array in the data.

In []: np_arr_data[0,:,:]

Out[]:

array([[81.26119 , 82.652336 , -8.111792 , ..., -1.0818703 ,

0.9723786 , 12.625183 ],

[79.604095 , 81.0157 , -7.6446114 , ..., -0.70481956,

0.7700771 , 11.893839 ],

[81.30211 , 80.7702 , -7.552573 , ..., -0.24044622,

0.54393727, 12.559112 ],

...,

[78.84715 , 81.93631 , 0.14975742, ..., 0.68785334,

1.0827516 , 9.047807 ],

[83.900276 , 84.698135 , 1.3170995 , ..., 0.51355934,

1.0503458 , 8.270673 ],

[82.5705 , 77.12868 , 0.6525055 , ..., 0.29619697,

1.0963339 , 9.127736 ]])

# Remember the shape of the arrays in the data

In []: np_arr_data[0,:,:].shape

Out[]: (160, 20)

# All we are doing is flattening the data from a shape of (160, 20) to (3200,)

In []: np_arr_data[0,:,:].flatten()

Out[]:

array([81.26119 , 82.652336 , -8.111792 , ..., 0.29619697,

1.0963339 , 9.127736 ])

In []: np_arr_data[0,:,:].flatten().shape

Out[]: (3200,)

'''

The ability to reshape the array from (99837, 160, 20) to (99837, 3200) is feasible

because the only alteration is in the array's structure, not its content validity.

The original shape is (160, 20) for any single array, and by flattening it we obtain

a shape of (3200,). Despite this transformation, the data's integrity remains intact.

This reshaping process does not compromise the data as the computer still interprets

the label as 0, regardless of whether the data is in a shape of (160, 20) or (3200,).

Electronically, the data remains associated with the correct label, enabling

the model to correctly understand that the information it processes is still

classified as 0. This process is actually used quite often, especially for image

or text data used in various types of models.

'''

'''

Now that we have flattened the data we can split the data into train and validation

sets. Scikit-learn offers a very nice utility to accomplish this.

'''

x_train, x_val, y_train, y_val = train_test_split(np_arr_data,

np_arr_labels,

test_size=0.2,

random_state=42)

Data Balance Check

Python Console:

'''

The critical consideration at this point is the balance of the data. When

constructing a Convolutional Neural Network, a crucial question to address is

whether the data is well-balanced. Imbalanced data will complicate training of the

model. Thus, we need to check the balance of the label data in, and only in, the

training set. We can do this by placing the label data into a Pandas DataFrame

and checking the counts of the values.

The label data only has one column where each value corresponds to how any given

array at any given index was classified. So, lets assign the label data to a

DataFrame.

'''

In []: df_labels_check_y_train = pd.DataFrame(y_train,columns=['label'])

In []: df_labels_check_y_train.head()

Out[]:

label

0 0.0

1 1.0

2 0.0

3 0.0

4 0.0

In []: df_labels_check_y_train.tail()

Out[]:

label

79864 0.0

79865 0.0

79866 0.0

79867 0.0

79868 0.0

'''

So, now that we have the label data into a nice DataFrame we can verify the balance

of the labels in the data by looking at the value counts of each label.

'''

In []: df_labels_check_y_train.value_counts()

Out[]:

label

0.0 71730

1.0 5620

2.0 1773

3.0 746

dtype: int64

'''

From viewing the value counts we can see that a majority of the labels are

Nominal (0.0). But this is still very imbalanced data. We can prove it using a

simple probability calculation;

P = num_of_pos_events_for_x / total_num_of_possible_events

So, we can get this fairly easily for each label.

'''

# We can get the total_num_of_possible_events by;

In []: df_labels_check_y_train.value_counts().sum()

Out[]: 79869

# Now iterate through the value_counts() and get the probability of randomly selecting a label.

In []: [round(i/df_labels_check_y_train.value_counts().sum(),3) for i in df_labels_check_y_train.value_counts()]

Out[]: [0.898, 0.07, 0.022, 0.009]

'''

Above shows proof that the data is imbalanced. If the data was more balanced we

would see the probabilities of each label being much closer to each other. Ideally

each should be closer to a probability of .25.

So, our question becomes, "how can we balance this data?"

'''

Balance by Random Oversampling

Python Console:

'''

One accepted method to balance imbalanced data is to use Random Oversampling. This

takes the minority classes and randomly duplicates them until a balanced dataset

is achieved. This will greatly improve the training of the model in terms of computational

effort.

Scikit-learn offers an easy to use utility to do this—RandomOverSampler().

I should state again here, that the only data that should be Randomly Oversampled

is the training data. Do not manipulate the validation data. Leave the validation

data alone.

'''

# Instantiate an instance of RandomOverSampler()

ros = RandomOverSampler()

# Instantiates resampled training data for x_train and y_train

x_train_res, y_train_res = ros.fit_resample(x_train, y_train)

'''

We can now run a check and see the results of the Random Oversample.

'''

In []: df_y_train_res = pd.DataFrame(y_train_res,columns=['label'])

# Check the value counts.

In []: df_y_train_res.value_counts()

Out[]:

label

0.0 71730

1.0 71730

2.0 71730

3.0 71730

dtype: int64

'''

We can see that we now have very balanced training data.

'''

Build the CNN Model

Code Editor:

# -*- coding: utf-8 -*-

"""

Created on Sat Nov 4 16:24:30 2023

@author: ang

"""

# Imports

import numpy as np

import tensorflow as tf

from tensorflow.keras import layers

from tensorflow.keras import losses

# Load the train/val data.

x_train = np.load('./data/x_train_res.npy', allow_pickle=True)

y_train = np.load('./data/y_train_res.npy', allow_pickle=True)

x_val = np.load('./data/x_val.npy', allow_pickle=True)

y_val = np.load('./data/y_val.npy', allow_pickle=True)

'''

Next we will build the model with layers. Unfortunately, there is no one size fits

all method when it comes to layering Deep Neural Networks. For this task the training

seemed to improve when I added enough dense layers. This is possibly due to the complexity

of the data. This aligns with the idea that deeper architectures, in certain cases,

can better represent complex relationships and features within a dataset.

'''

_model = tf.keras.Sequential([

layers.Flatten(),

layers.Dense(128, activation="relu", name="dense_1"),

layers.Dense(128, activation="relu", name="dense_2"),

layers.Dense(128, activation="relu", name="dense_3"),

layers.Dense(128, activation="relu", name="dense_4"),

layers.Dense(128, activation="relu", name="dense_5"),

layers.Dense(128, activation="relu", name="dense_6"),

layers.Dense(128, activation="relu", name="dense_7"),

layers.Dense(128, activation="relu", name="dense_8"),

layers.Dense(128, activation="relu", name="dense_9"),

layers.Dense(128, activation="relu", name="dense_10"),

layers.Dense(128, activation="relu", name="dense_11"),

layers.Dense(128, activation="relu", name="dense_12"),

layers.Dense(4, activation="softmax", name="predictions"),

])

'''

Then we compile the model. The two metrics we are interested in here are loss and

Subset Accuracy. Where subset accuracy compares the predicted class indices to the

true class indices. It can do so because when the model predicts a value it returns

a probability for each class—the highest of within is the predicted class.

Our loss metric will be SparseCategoricalCrossentropy, which assesses the disparity

between the predicted probability distribution and the true class distribution,

specifically designed for multi-class classification models with integer-encoded

labels.

'''

# MODEL 'Accuracy' AND SparseCategoricalAccuracy AND SubsetAccuracy HERE ARE SYNONYMOUS

_model.compile(

loss=losses.SparseCategoricalCrossentropy(from_logits=True),

optimizer='adam',

metrics=[

tf.metrics.SparseCategoricalAccuracy(name="SubsetAccuracy"),

])

'''

Next we train the model. An epoch is one complete pass through the training data.

Each epoch allows the model to adjust the weights and biases to minimize loss and

improve accuracy.

'''

_epochs = 3

bin_history = _model.fit(

x_train,

y_train,

epochs=_epochs,

validation_data=(x_val,y_val)

)

Python Console:

Epoch 1/3

8967/8967 [=============] - 50s 5ms/step - loss: 0.5528 - SubsetAccuracy: 0.7885 - val_loss: 0.9054 - val_SubsetAccuracy: 0.5335

Epoch 2/3

8967/8967 [=============] - 47s 5ms/step - loss: 0.5150 - SubsetAccuracy: 0.8001 - val_loss: 0.3837 - val_SubsetAccuracy: 0.8533

Epoch 3/3

8967/8967 [=============] - 47s 5ms/step - loss: 0.3752 - SubsetAccuracy: 0.8695 - val_loss: 0.3976 - val_SubsetAccuracy: 0.8815

Done.

'''

In the training process, spanning three epochs, the model demonstrates a consistent

decrease in training loss and an improvement in subset accuracy, reaching 86.95% by

the third epoch. The validation results also showcase positive trends, with a notable

increase in validation subset accuracy from 53.35% to 88.15% over the same period,

suggesting that the model is effectively learning and generalizing from the training

data. The overall performance metrics indicate a successful training process with no

clear signs of over-fitting within the provided epochs.

'''

Model Analysis Setup

Code Editor:

# -*- coding: utf-8 -*-

"""

Created on Wed Nov 29 06:02:43 2023

@author: ang

"""

import numpy as np

import pandas as pd

import tensorflow as tf

# Load in the train split data

x_train = np.load('./data/x_train.npy', allow_pickle=True)

y_train = np.load('./data/y_train.npy', allow_pickle=True)

# Load the trained model

flight_anomaly_model = tf.keras.models.load_model('./models/flight_anomaly_detection_model')

'''

So, our model here takes the flight data from NASA and flattens the array from

2 dimentions (160, 20) reshaped to (1, 3200). Then the data is

passed and evaluated through twelve dense layers. The last dense layer outputs the

predictions as a softmax array. Meaning, there are the same number of values between

0.0 and 1.0 in an array. The highest value is the label that the model predicts

in the order they exist at index 0, 1, 2, or 3.

To pass data into this model for prediction the data must be reshaped.

# E.x. where the predicted value is Nominal; index 0 is the highest probability

In []: _model.predict(x_train[0].reshape(1,3200)).round(3)

Out[]: array([[0.746, 0.209, 0.039, 0.006]], dtype=float32)

'''

'''

So we now have a trained model that will detect flight anomalies in the data.

We can look specifically at how the model performed on the training data by writing

our own custom function that returns a DataFrame.

'''

def get_multi_class_eval_dataframe():

'''

This function may take a long time to complete.

Returns

-------

df : Pandas DataFrame.

A DataFrame containing actual and missed values from the training data.

'''

print("Working...This may take a while...")

counter = 0

# Places the training predictions into a list

train_predictions_lst = []

for i in x_train[0:]:

train_predictions_lst.append(flight_anomaly_model.predict(i.reshape(1,3200),verbose=0).round(3))

counter += 1

if counter % 1000 == 0:

print(f"COUNT: {counter} of 79869")

# GET LISTS OF MISSED PREDICTIONS INDEX, ACTUAL VAL, AND PREDICTED VAL

missed_preds_idx = []

missed_preds_act_val = []

missed_preds_val_pred = []

for index, (first, second) in enumerate(zip(y_train, train_predictions_lst)):

if first != second.argmax():

missed_preds_idx.append(index)

missed_preds_act_val.append(first)

missed_preds_val_pred.append(second)

# BUILD THE DATAFRAME

df = pd.DataFrame({'missed_pred_idx':missed_preds_idx,

'missed_pred_act_val':missed_preds_act_val,

'missed_pred_val_pred':missed_preds_val_pred})

# ADD missed prediction predicted value COL from missed_preds_val_pred; argmax from probability array.

argmax_lst = []

for i in df.missed_pred_val_pred:

argmax_lst.append(i.argmax())

df['missed_pred_val_pred_argmax'] = argmax_lst

return df

# Commented out calling the function due to runtime concerns; table is saved as df_missed_predictions.csv

# df_missed_preds = get_multi_class_eval_dataframe()

Model Performance Analysis

Code Editor:

# -*- coding: utf-8 -*-

"""

Created on Wed Nov 29 06:02:43 2023

@author: ang

"""

import numpy as np

import pandas as pd

import tensorflow as tf

# Load in the training data.

x_train = np.load('./data/x_train.npy', allow_pickle=True)

y_train = np.load('./data/y_train.npy', allow_pickle=True)

# Load the trained model.

flight_anomaly_model = tf.keras.models.load_model('./models/flight_anomaly_detection_model')

# Load the predictions DataFrame.

df_missed_predictions = pd.read_csv(r".\data\df_missed_predictions.csv").iloc[0:,1:]

'''

So, our model here takes the flight data from NASA and flattens the array from

2 dimentions (160, 20) reshaped to (1, 3200). Then the data is

passed and evaluated through twelve dense layers. The last dense layer outputs the

predictions as a softmax array. Meaning, there are the same number of values between

0.0 and 1.0 in an array. The highest values index is the label that the model

predicts in the order they exist at index 0 (Nominal), 1 (Speed High),

2 (Path High), or 3 (Flaps Lable Setting).

To pass data into this model for prediction the data must be reshaped.

# E.x. where the predicted value is Nominal; index 0 is the highest probability

In []: _model.predict(x_train[0].reshape(1,3200)).round(3)

Out[]: array([[0.746, 0.209, 0.039, 0.006]], dtype=float32)

'''

'''

Here I have written several functions that simplify getting a prediction from the

model.

'''

def get_train_arr(idx_):

'''

Function gets a single array by index from the training data.

Parameters

----------

idx_ : Int.

Array at Int index.

Returns

-------

test_arr : Numpy Array.

Numpy array of flight data shape (1, 3200).

df_test : Pandas DataFrame.

Pandas DataFrame of the flight data.

'''

test_arr = x_train[idx_]

test_arr = test_arr.reshape(160,20)

df_test = pd.DataFrame(test_arr, columns=[

'AILERON POSITION LH',

'AILERON POSITION RH',

'CORRECTED ANGLE OF ATTACK',

'BARO CORRRECT ALTITUDE LSP',

'COMPUTED AIRSPEED LSP',

'SELECTED COURSE',

'DRIFT ANGLE',

'ELEVATOR POSITION LEFT',

'T.E. FLAP POSITION',

'GLIDESLOPE DEVIATION',

'SELECTED HEADING',

'LOCALIZER DEVIATION',

'CORE SPEED AVG',

'TOTAL PRESSURE LSP',

'PITCH ANGLE LSP',

'ROLL ANGLE LSP',

'RUDDER POSITION',

'TRUE HEADING LSP',

'VERTICAL ACCELERATION',

'WIND SPEED'

])

test_arr = test_arr.reshape(1, 3200)

return test_arr, df_test

def df_to_arr(some_df):

'''

Function takes a DataFrame of flight data and returns Numpy array

shape (1,3200).

Parameters

----------

some_df : Pandas DataFrame.

Pandas DataFrame of flight data.

Returns

-------

t_a : Numpy Array.

Numpy array of flight data shape (1, 3200).

'''

t_a = some_df.to_numpy().reshape(1,3200)

return t_a

def get_pred(some_data):

'''

Gets a probability distribution array from the flight_anomaly_model with a

passed Numpy Array or Pandas DataFrame.

Parameters

----------

some_data : A Numpy ndarray or Pandas DataFrame

A numpy array shape (1, 3200) or an Pandas DataFrame.

Returns

-------

preds : Numpy Array or None.

If conditions are met returns probability distribution as Numpy array.

'''

if type(some_data) == pd.core.frame.DataFrame and some_data.shape==(160, 20):

preds = flight_anomaly_model.predict(df_to_arr(some_data))

elif type(some_data) == np.ndarray and some_data.shape == (1, 3200):

preds = flight_anomaly_model.predict(some_data)

else:

preds = None

print('Argument must be a an numpy.ndarray of shape (1, 3200) or pandas.core.DataFrame of shape (160, 20)')

return preds.round(4)

Predictive Class Comparisons

Python Console:

'''

We have df_missed_predictions which contains;

missed_pred_idx -- the index of the missed prediction in the x_train data

missed_pred_act_val -- the actual value of the missed prediction

missed_pred_val_pred -- the value predicted as probability array

missed_pred_val_pred_argmax -- the integer label of the predicted class from missed_pred_val_pred

'''

In []: df_missed_predictions

Out[]:

missed_pred_idx missed_pred_act_val missed_pred_val_pred \

0 4 0.0 [[0.287 0.686 0.015 0.012]]

1 16 0.0 [[0.469 0.494 0.027 0.01 ]]

2 20 0.0 [[0.354 0.616 0.019 0.011]]

3 29 0.0 [[0.438 0.528 0.023 0.011]]

4 35 0.0 [[0.326 0.645 0.017 0.012]]

... ... ...

9483 79833 0.0 [[0.084 0.89 0.005 0.021]]

9484 79842 0.0 [[0.423 0.544 0.022 0.011]]

9485 79845 0.0 [[0.329 0.296 0.344 0.031]]

9486 79859 0.0 [[0.34 0.631 0.018 0.011]]

9487 79866 0.0 [[0.41 0.559 0.021 0.01 ]]

missed_pred_val_pred_argmax

0 1

1 1

2 1

3 1

4 1

...

9483 1

9484 1

9485 2

9486 1

9487 1

[9488 rows x 4 columns]

'''

We see here that the model misclassified 9488 predictions from the training data.

That is about 11.879% of the data. This means the model predicted 88.121% of the

training data correctly.

'''

'''

Next, we can look at the frequency with which the model misclassified each class.

'''

In []: df_missed_predictions.missed_pred_act_val.value_counts()

Out[]:

0.0 7157

1.0 2135

2.0 173

3.0 23

Name: missed_pred_act_val, dtype: int64

'''

When we checked the balance of the training labels we saw that Nominal was majority;

In []: df_labels_check_y_train.value_counts()

Out[]:

label

0.0 71730

1.0 5620

2.0 1773

3.0 746

dtype: int64

We see the distribution before Randomly Oversampling the minority classes. This

shows that, of the training data the model misclassified;

Class | % misclassified

Nominal 0.09

Speed High 0.38

Path High 0.1

Flaps Late Setting 0.03

Really the only class the model struggles to classify is Speed High. Now, let us

see what it is misclassifying Speed High as. Let us isolate the Speed High classifications

into its own DataFrame.

'''

In []: df_sh = df_missed_predictions.loc[df_missed_predictions['missed_pred_act_val']==1]

In []: df_sh

Out[]:

missed_pred_idx missed_pred_act_val missed_pred_val_pred \

10 121 1.0 [[0.713 0.243 0.038 0.007]]

12 135 1.0 [[0.561 0.4 0.03 0.008]]

15 149 1.0 [[0.795 0.157 0.043 0.005]]

17 167 1.0 [[0.581 0.381 0.03 0.009]]

20 178 1.0 [[0.828 0.121 0.046 0.004]]

... ... ...

9457 79650 1.0 [[0.506 0.458 0.026 0.01 ]]

9458 79654 1.0 [[0.637 0.322 0.033 0.008]]

9475 79789 1.0 [[0.691 0.266 0.036 0.007]]

9477 79795 1.0 [[0.611 0.349 0.031 0.009]]

9480 79811 1.0 [[0.871 0.074 0.052 0.003]]

missed_pred_val_pred_argmax

10 0

12 0

15 0

17 0

20 0

...

9457 0

9458 0

9475 0

9477 0

9480 0

[2135 rows x 4 columns]

'''

Now we can check the frequency of each value Speed High was predicted to be.

'''

In []: df_sh.missed_pred_val_pred_argmax.value_counts()

Out[]:

0 2005

2 80

3 50

Name: missed_pred_val_pred_argmax, dtype: int64

'''

We can see here that the model struggles to classify "Speed High" correctly

and distinguish between "Nominal" and "Speed High". The model tends to misclassify a

substantial portion of these instances, often predicting them as the majority class,

"Nominal". This observation underscores the need for targeted improvements to enhance

the model's discrimination capabilities, particularly in distinguishing between the

"Nominal" and "Speed High" classes.

Overall, the model exhibits commendable performance in correctly making predictions

as reflected in its high accuracy of 88.121%. Despite struggles with certain minority

classes, the model's ability to accurately classify the majority class highlights

its overall effectiveness. Further refinements and fine-tuning may be beneficial,

especially in addressing specific challenges associated with the "Speed High" class

misclassifications. Continued evaluation and iterative improvements can contribute

to enhancing the model's overall predictive capabilities.

'''